Part A: Image Warping and Mosaics

Step 1: Taking Images

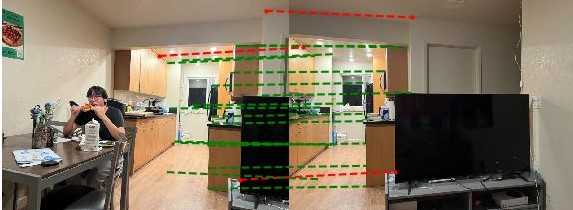

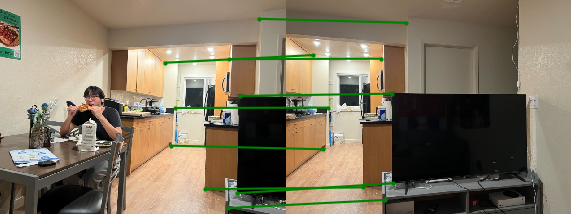

My Living Room

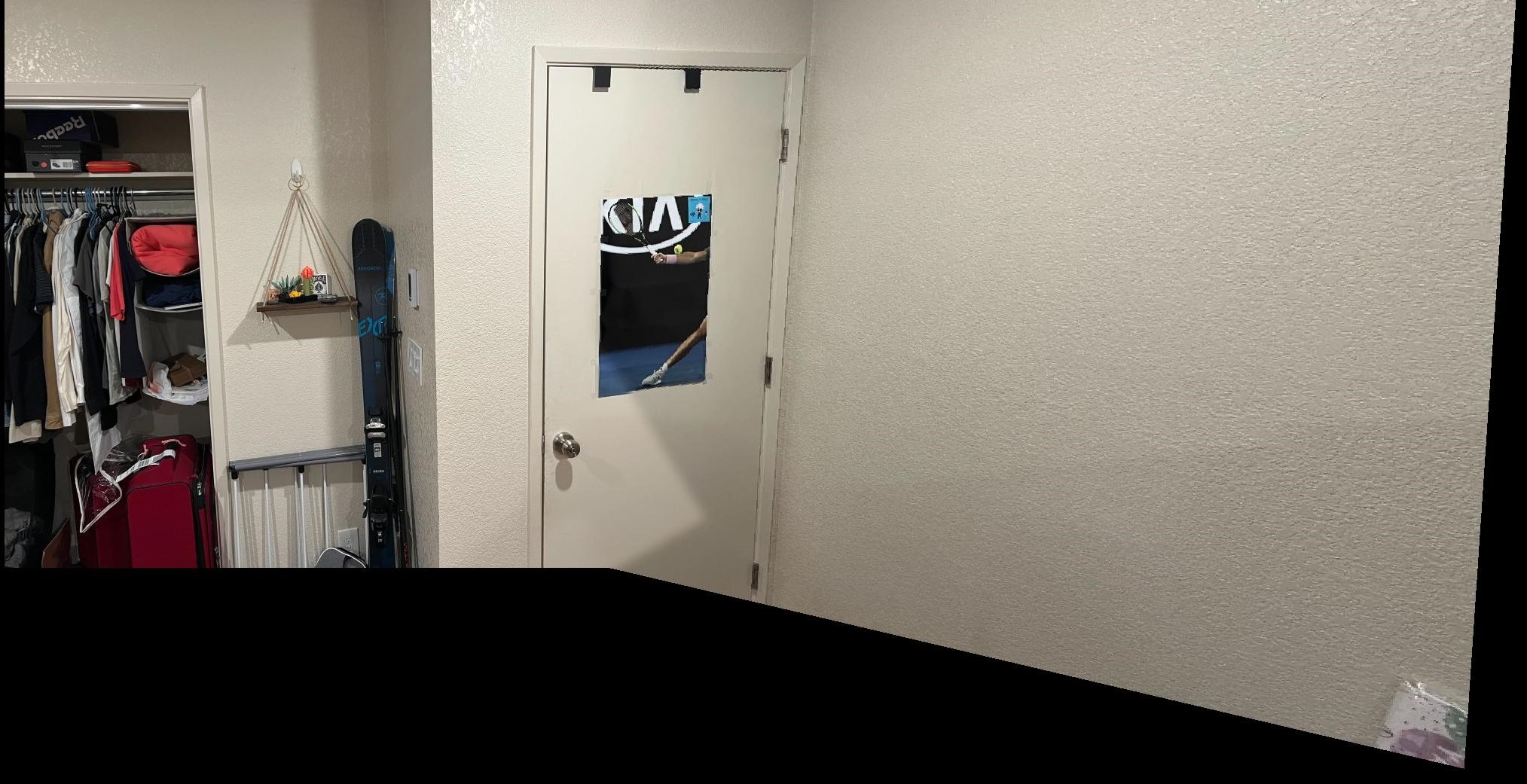

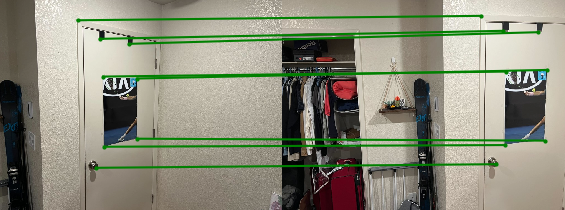

My Housemate's Bedroom

Outside my Apartment

Step 2: Recovering Homographies

To get a projective mapping between the common features in the first image and the second image, we first have to find pairs of corresponding points. I do this by hand-labelling matching corners in the first and second images. When labelling matching points, I tend to pick the corners of recognizable features, since they allow for accurate correspondences between images. As you can see in the examples, I used features such as the corners of TVs, doorframes, walls, countertops, posters, and tables.

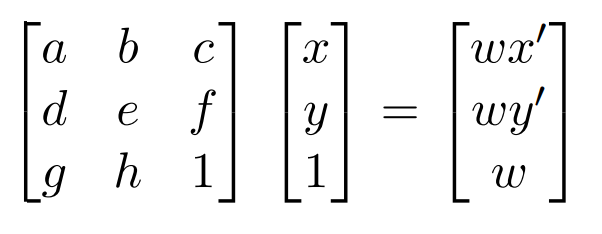

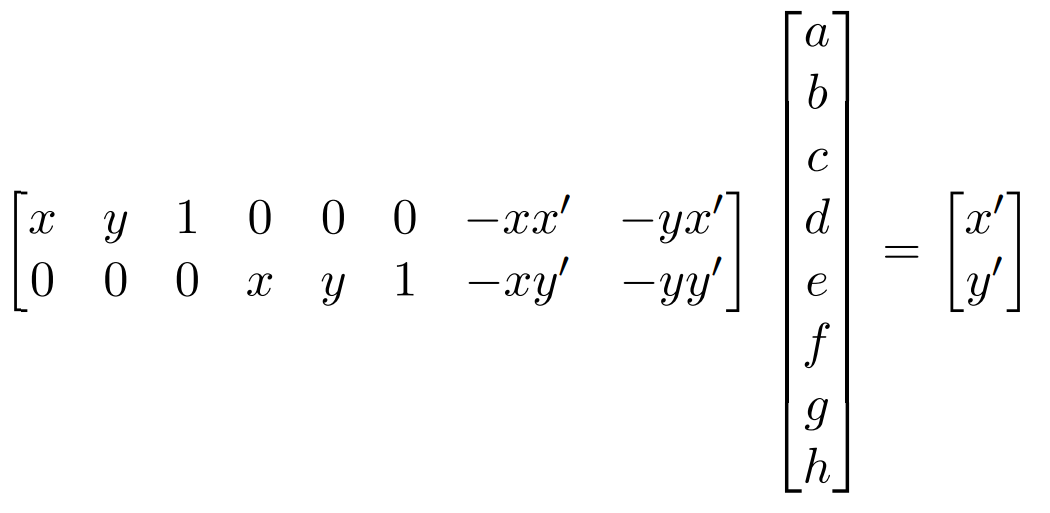

After getting pairs of points, we want to find a mapping that moves points in the first image to their corresponding locations in the second image. That is, we want a projective transformation H that satisfies the following system of linear equations.
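A standard way to write this relationship, assuming homogeneous coordinates and an H scaled so that its bottom-right entry is 1 (the entry names a through h and the scale factor w are labels chosen here for illustration), is:

$$
w \begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix}
=
\begin{bmatrix} a & b & c \\ d & e & f \\ g & h & 1 \end{bmatrix}
\begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
$$

Here (x, y) is a point in the first image, (x', y') is its match in the second image, and w is the unknown projective scale that is divided out to recover pixel coordinates.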

We can compute this H matrix by rearranging the original equation into an alternate system of equations with the entries of H as the unknowns.
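As a sketch of that rearrangement, assume H has entries a through h with its bottom-right entry fixed to 1 (labels chosen here for illustration). Dividing out the projective scale gives, for one correspondence,

$$
x' = \frac{a x + b y + c}{g x + h y + 1}, \qquad
y' = \frac{d x + e y + f}{g x + h y + 1}
$$

Multiplying both sides by the denominator and collecting the unknowns yields two linear equations per point pair:

$$
\begin{bmatrix}
x & y & 1 & 0 & 0 & 0 & -x x' & -y x' \\
0 & 0 & 0 & x & y & 1 & -x y' & -y y'
\end{bmatrix}
\begin{bmatrix} a \\ b \\ c \\ d \\ e \\ f \\ g \\ h \end{bmatrix}
=
\begin{bmatrix} x' \\ y' \end{bmatrix}
$$

Stacking these rows for every labelled pair gives a system with eight unknowns, so at least four pairs are needed to determine H.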

We can then use the points that we have manually labelled as the values for x, y, x', and y' to create a solvable system of equations. However, due to noise in both the image capture and the correspondence matching, the labelled points will not fit a single H exactly, so we use least squares to find an approximate solution for the values of H.
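The least-squares step could look roughly like the sketch below. It assumes NumPy, fixes the bottom-right entry of H to 1, and uses hypothetical function names (`compute_homography`, `apply_homography`) that are not taken from the project code.

```python
import numpy as np

def compute_homography(src_pts, dst_pts):
    """Estimate a 3x3 homography (H[2, 2] fixed to 1) mapping src_pts to dst_pts.

    Both inputs are (N, 2) arrays of (x, y) points with N >= 4.
    """
    src = np.asarray(src_pts, dtype=float)
    dst = np.asarray(dst_pts, dtype=float)
    if src.shape != dst.shape or src.ndim != 2 or src.shape[1] != 2 or len(src) < 4:
        raise ValueError("need two (N, 2) point arrays with N >= 4")

    n = len(src)
    A = np.zeros((2 * n, 8))
    b = np.zeros(2 * n)
    for i, ((x, y), (xp, yp)) in enumerate(zip(src, dst)):
        # Two linear equations per correspondence, unknowns a..h.
        A[2 * i] = [x, y, 1, 0, 0, 0, -x * xp, -y * xp]
        A[2 * i + 1] = [0, 0, 0, x, y, 1, -x * yp, -y * yp]
        b[2 * i] = xp
        b[2 * i + 1] = yp

    # Least-squares solution of the (usually overdetermined) system A h = b.
    h, *_ = np.linalg.lstsq(A, b, rcond=None)
    return np.append(h, 1.0).reshape(3, 3)

def apply_homography(H, pts):
    """Map (N, 2) points through H and divide out the projective scale."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]
```

With more than four pairs, the extra equations average out some of the labelling noise instead of forcing H to pass exactly through any single noisy point.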

Step 3: Warp the images

We use inverse warping to warp the images. To do this, we first need to create a bounding box for the final warped image. I do this by using the homography that we calculated in the previous step to map the corners of the original image to their new locations in the warped image. After this, the process is similar to the inverse warping that we used in project 3. We use the inverse of the homography to map each pixel of the warped image back to its location in the original image, and then use interpolation to get the color at that location.
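A minimal sketch of this procedure follows, assuming NumPy, bilinear interpolation (the text does not say which interpolation was used), and an input image at least 2 pixels wide and tall. The function name `warp_image` and the returned offset are illustrative choices, not the project's actual code.

```python
import numpy as np

def warp_image(img, H):
    """Inverse-warp img (H x W or H x W x C) by homography H.

    H maps source (x, y) to output coordinates. Returns the warped image and
    the (x_min, y_min) offset of its bounding box in output coordinates.
    """
    h, w = img.shape[:2]
    # Forward-map the four source corners to find the output bounding box.
    corners = np.array([[0, 0, 1], [w - 1, 0, 1], [w - 1, h - 1, 1], [0, h - 1, 1]], dtype=float)
    mapped = corners @ H.T
    mapped = mapped[:, :2] / mapped[:, 2:3]
    x_min, y_min = np.floor(mapped.min(axis=0)).astype(int)
    x_max, y_max = np.ceil(mapped.max(axis=0)).astype(int)
    out_w, out_h = x_max - x_min + 1, y_max - y_min + 1

    # Map every output pixel back into the source image through H^-1.
    xs, ys = np.meshgrid(np.arange(x_min, x_max + 1), np.arange(y_min, y_max + 1))
    dest = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])
    src = np.linalg.inv(H) @ dest
    sx, sy = src[0] / src[2], src[1] / src[2]

    # Keep only pixels that land inside the source (small tolerance for rounding).
    eps = 1e-9
    valid = (sx >= -eps) & (sx <= w - 1 + eps) & (sy >= -eps) & (sy <= h - 1 + eps)
    sx = np.clip(sx[valid], 0, w - 1)
    sy = np.clip(sy[valid], 0, h - 1)

    # Bilinear interpolation between the four neighbouring source pixels.
    x0 = np.clip(np.floor(sx).astype(int), 0, w - 2)
    y0 = np.clip(np.floor(sy).astype(int), 0, h - 2)
    fx, fy = sx - x0, sy - y0
    if img.ndim == 3:
        fx, fy = fx[:, None], fy[:, None]
    img_f = img.astype(float)
    top = img_f[y0, x0] * (1 - fx) + img_f[y0, x0 + 1] * fx
    bottom = img_f[y0 + 1, x0] * (1 - fx) + img_f[y0 + 1, x0 + 1] * fx

    out = np.zeros((out_h * out_w,) + img.shape[2:], dtype=float)
    out[valid] = top * (1 - fy) + bottom * fy
    return out.reshape((out_h, out_w) + img.shape[2:]), (x_min, y_min)
```

Looping over output pixels rather than input pixels is what avoids holes: every pixel in the bounding box gets exactly one lookup, and pixels that fall outside the source simply stay black.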

Step 4: Image Rectification

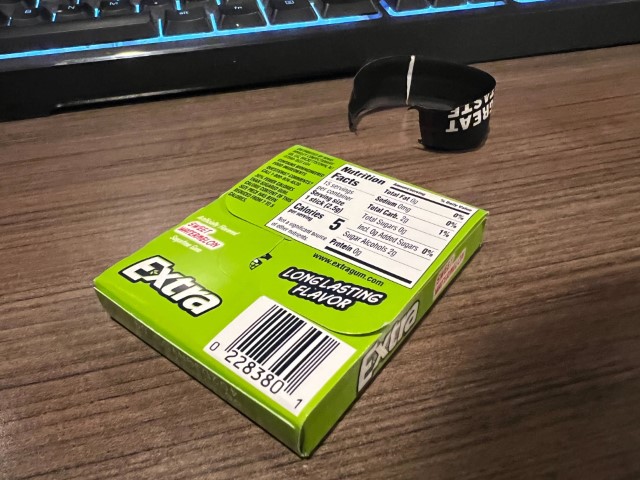

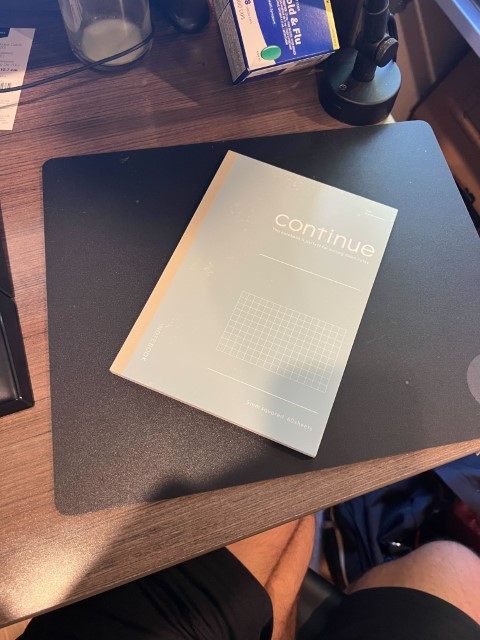

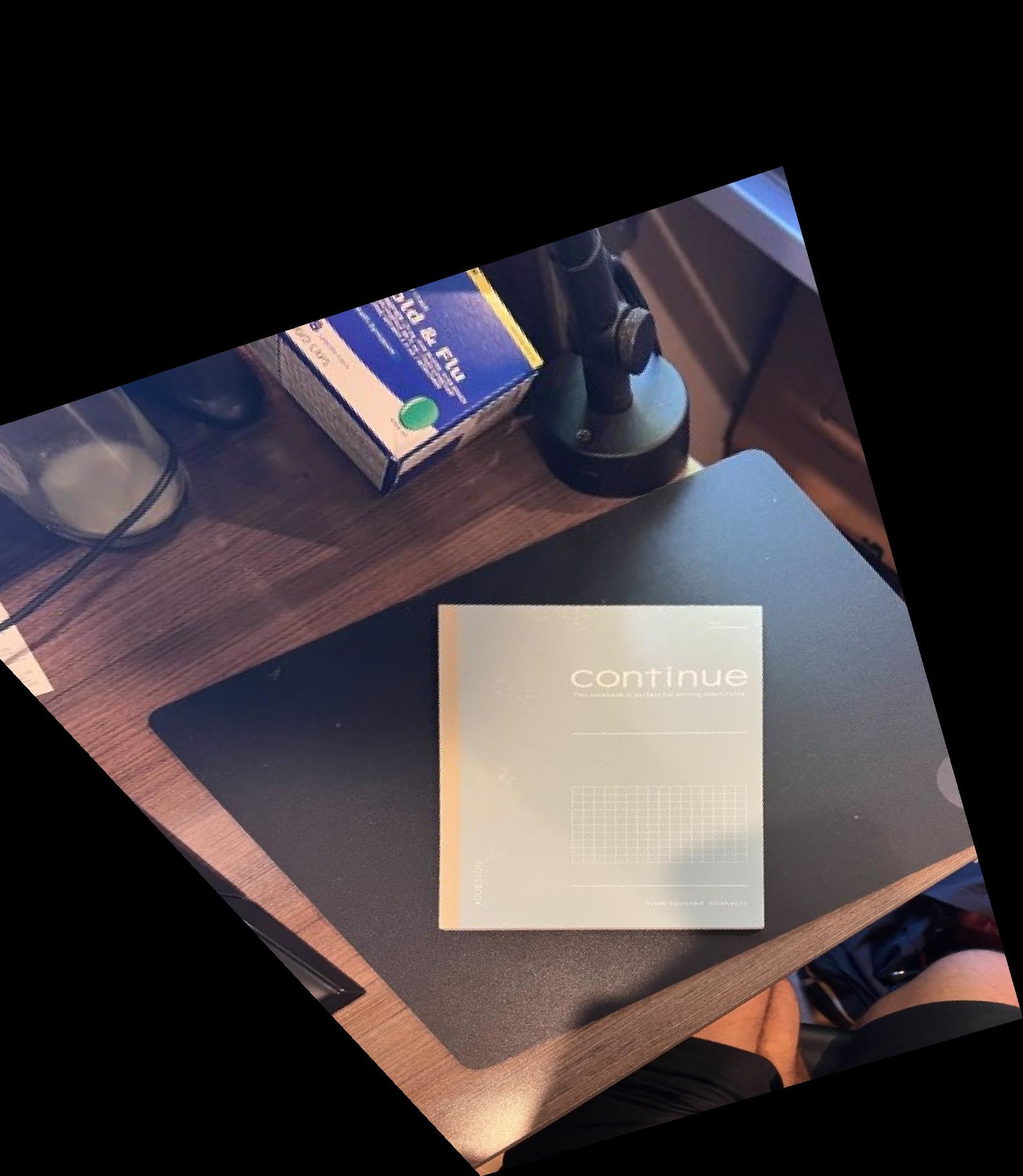

To test the code from Step 3, I used image rectification on rectangular objects. I first took a picture of a notebook and a piece of gum on my desk. Since I didn't photograph them from directly above, they were not perfect rectangles in the original image.

I then performed image rectification, which warps each of these objects onto a rectangle so that it is aligned with the edges of the image. Below we can see the results of the rectification of these images.
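One way to set up the rectifying homography is sketched below, assuming NumPy and four hand-clicked corners given in top-left, top-right, bottom-right, bottom-left order. The function name `rectify_homography` and the target `width` and `height` are hypothetical: the real output size would be chosen to match the object's aspect ratio.

```python
import numpy as np

def rectify_homography(quad, width, height):
    """Homography taking four clicked corners (TL, TR, BR, BL) onto an
    axis-aligned width x height rectangle with its top-left at the origin."""
    quad = np.asarray(quad, dtype=float)
    target = np.array([[0, 0], [width, 0], [width, height], [0, height]], dtype=float)

    A, b = [], []
    for (x, y), (xp, yp) in zip(quad, target):
        # Same two equations per point as in the general homography fit.
        A.append([x, y, 1, 0, 0, 0, -x * xp, -y * xp])
        A.append([0, 0, 0, x, y, 1, -x * yp, -y * yp])
        b += [xp, yp]

    # Exactly four points give an 8x8 system, so it can be solved directly.
    h = np.linalg.solve(np.array(A), np.array(b))
    return np.append(h, 1.0).reshape(3, 3)
```

The resulting matrix can then be passed to the same inverse-warping routine used for the mosaics, which makes rectification a convenient end-to-end check of both steps.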

Step 5: Mosaics

After warping the original image, we need to properly blend the two images together. Simply averaging the two images will not work properly, because small differences in lighting become apparent and there is a clear boundary between the two images.

As you can see in the previous image, although the images are properly aligned, doing a naive warp leaves a very clear border between the two images. To combat this, we instead use multi-resolution blending to remove the seam. To do this, we first need to create an alpha mask that tells the blending algorithm how much of each image to use at each pixel. We start by calculating a distance transform on the bounding box of each image. This gives the distance from each pixel in either image to the edge of its bounding box. We can then run a pixel-wise comparison on these two distance transforms to build a larger mask that can be used to blend the two images.

At each pixel, if image 1's distance is greater we set the mask to 0, and if image 2's distance is greater we set it to 1. Doing so gives us the final mask that we can use for 2-level multi-resolution blending.
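The mask construction described above can be sketched as follows. This assumes NumPy and axis-aligned bounding boxes placed on a shared canvas, for which the distance to the nearest box edge has a closed form. The helper names `edge_distance` and `blend_mask` are illustrative, and a general-purpose distance transform from an image library would work equally well.

```python
import numpy as np

def edge_distance(canvas_shape, top_left, size):
    """Distance from each canvas pixel to the nearest edge of a bounding box.

    canvas_shape is (rows, cols); top_left is (x0, y0); size is (w, h).
    Pixels outside the box get distance 0.
    """
    rows, cols = canvas_shape
    x0, y0 = top_left
    w, h = size
    ys, xs = np.mgrid[0:rows, 0:cols]
    # Steps needed to leave the box going left, right, up, or down.
    d = np.minimum.reduce([xs - x0 + 1, x0 + w - xs, ys - y0 + 1, y0 + h - ys])
    return np.clip(d, 0, None).astype(float)

def blend_mask(dist1, dist2):
    """0 where image 1 is deeper inside its box, 1 where image 2 is deeper."""
    return (dist2 > dist1).astype(float)
```

Because each pixel goes to whichever image it sits deeper inside, the seam falls roughly down the middle of the overlap region, away from the edges of either image.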

Finally, we can use the multi-resolution blending that we implemented in project 2 to blend the warped first image and the second image using the mask we just created. Doing so gives us the following results for the 3 images that I took in the first part of the project.
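The project 2 implementation is not shown here, so the following is only a sketch of one common form of 2-level blending, assuming NumPy, grayscale float images already placed on the same canvas, and a mask that is 0 where image 1 should dominate and 1 where image 2 should. The function names and the `sigma` value are illustrative.

```python
import numpy as np

def gaussian_blur(img, sigma=2.0):
    """Separable Gaussian blur of a 2D array, with edge padding."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    # Blur along rows, then along columns; "valid" trims the padding again.
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

def two_level_blend(img1, img2, mask, sigma=2.0):
    """Blend low frequencies with a softened mask and high frequencies with the hard mask."""
    low1, low2 = gaussian_blur(img1, sigma), gaussian_blur(img2, sigma)
    high1, high2 = img1 - low1, img2 - low2
    soft = gaussian_blur(mask, sigma)
    low = (1 - soft) * low1 + soft * low2
    high = (1 - mask) * high1 + mask * high2
    return low + high
```

Blending the low frequencies gradually hides lighting differences, while switching the high frequencies sharply avoids ghosting of fine detail. For color images, the same function can be applied to each channel separately.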